Ask Questions and Experiment

- Visarga H

- Oct 27, 2023

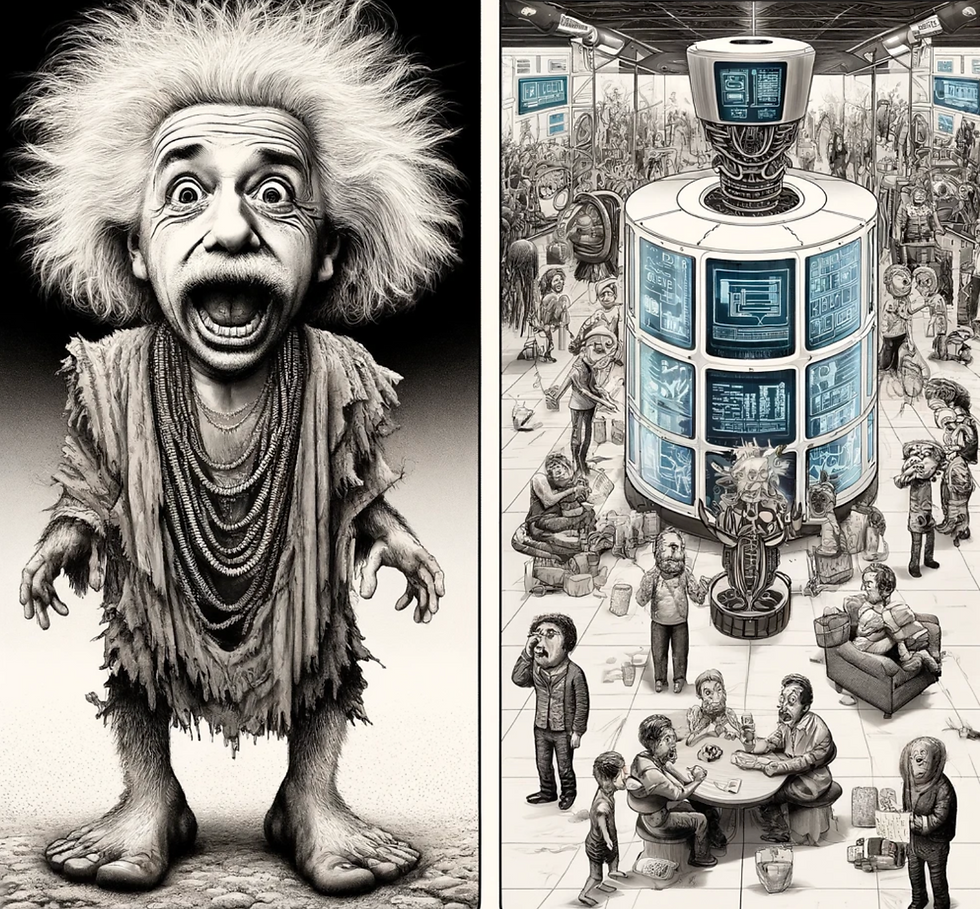

The Quest for Artificial General Intelligence

The long-standing goal of artificial general intelligence (AGI) remains distant despite the rapid advances in AI over the past decade. While narrow AI has achieved superhuman performance on specialized tasks like image recognition, game playing, and language translation, existing systems lack the flexible reasoning, contextual understanding, and transferable learning capacities exhibited by human cognition. Bridging this gap to achieve human-level artificial general intelligence will require fundamental breakthroughs in multiple areas.

Recent Progress Towards AGI

The emergence of large language models like GPT-3 and GPT-4 represents an important milestone. Their ability to generate nuanced, human-like text reveals increased mastery of linguistic patterns, basic reasoning, and common sense. Meanwhile, multimodal models like DALL-E 3 demonstrate surprising visual creativity and intuition. Although still narrow in scope, these systems point towards the prerequisites for more capable AI: the accumulation of broad world knowledge and its grounding in realistic reasoning.

Key Challenges on the Path to AGI

Despite impressive progress, major gaps remain across all facets of intelligence, including abstraction, imagination, goal-setting, self-reflection, theory of mind, and debugging abilities. The brittle nature of today's AI underscores the need for advances in robustness, generalization, and error handling. Even basic cognitive capabilities taken for granted in humans, like maintaining a consistent persona and worldview, lie far out of reach for current systems. Reproducing the innate common sense that allows humans to operate in the ambiguous real world remains a monumental challenge.

The Central Role of Knowledge and Reasoning

Bridging these gaps ultimately comes down to how diverse knowledge and experience are represented, reasoned over, and acquired. Architectures and training approaches must focus on encoding rich world models, learning causal patterns, grounding language in reality, and accumulating wisdom through interaction. Raw memorization of large datasets appears insufficient without more systematic techniques for extracting structured knowledge. Combining neural learning with symbolic logic and reasoning techniques may provide a path to higher-level cognition.

Towards Active Machine Learning

Rather than the passive ingestion of training data, the path forward relies crucially on AI systems becoming active learners. Large language models offer a seed capability to intelligently study and enhance their own training data through summarization, connection building, visualization, and augmentation. This machine studying paradigm allows subsequent generations of systems to learn more efficiently and capably from enhanced datasets. Active learning also suggests a need for architectures that support hands-on experimentation, asking clarifying questions, and efficiently incorporating real-world feedback to form accurate mental models.
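To make the contrast between passive ingestion and asking questions concrete, here is a toy sketch in Python. It is only an illustration under stated assumptions, not a description of how any real system is trained: the `oracle`, its hidden `threshold`, and both learner functions are hypothetical names invented for this example. Both learners try to locate a hidden number between 0 and 1 using yes/no answers. The passive learner takes whatever random examples it is given, while the active learner chooses each question to halve its remaining uncertainty.

```python
import random


def oracle(x, threshold=0.37):
    """Hypothetical environment: answers 'is x at or above the hidden threshold?'."""
    return x >= threshold


def passive_learner(ask, n_questions, rng):
    """Learns from randomly drawn examples it did not choose."""
    low, high = 0.0, 1.0
    for _ in range(n_questions):
        x = rng.random()
        if ask(x):
            high = min(high, x)  # threshold is at or below x
        else:
            low = max(low, x)    # threshold is above x
    return (low + high) / 2      # best guess: middle of what is still possible


def active_learner(ask, n_questions):
    """Chooses each question itself: always the midpoint of the uncertain interval."""
    low, high = 0.0, 1.0
    for _ in range(n_questions):
        mid = (low + high) / 2
        if ask(mid):
            high = mid
        else:
            low = mid
    return (low + high) / 2


if __name__ == "__main__":
    rng = random.Random(0)
    print("passive estimate:", passive_learner(oracle, 10, rng))
    print("active estimate: ", active_learner(oracle, 10))
```

With the same budget of ten questions, the active learner is guaranteed to shrink its uncertainty to an interval of width 1/1024, whereas the passive learner depends on how close its random examples happen to land. Real-world feedback is far messier than a single threshold, but the same intuition motivates letting a system decide what to ask next.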
