Deconstructing Model Hype: Why Language Deserves the Credit

- Visarga

- Oct 26, 2023

- 2 min read

Updated: Oct 30, 2023

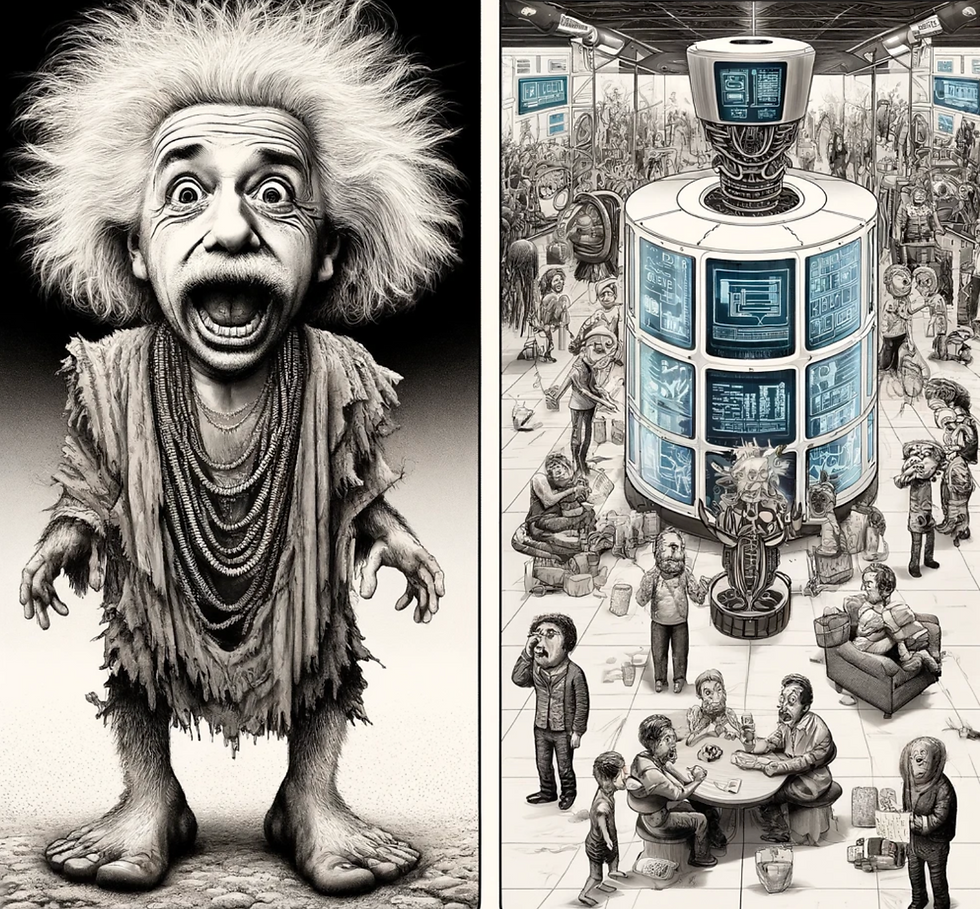

The impressive text generation abilities of large language models, such as GPT-3, have led many to mistakenly credit their architectures with cognitive abilities. This perspective risks sidelining the genuine hero: language itself, which serves as a reservoir of encoded knowledge. By envisioning language as an operating system, a "language-OS" running on top of neural nets, we redirect the acclaim from mere models to the intrinsic power embedded in words.

Advocates of such models often forget about language and discuss only the models. However, it's crucial to understand that these models start as a tabula rasa: their capacities are shaped exclusively by exposure to human text. The allure of "foundational models" often obscures this reality. Without the skills infused by language, these models would hold no value. It is language that equips them with the protocols needed for learning, thinking, and communicating. The efficacy of models lies not in their innovative design, but in how deeply they've been enriched with human conversation, which serves as a proxy for real-life experience.

Consider this analogy: if a group of students is taught the same curriculum, their acquired skills will be more or less alike, even though every brain is different. Similarly, if two distinct AI models of comparable size are trained on identical data, their acquired skills will mirror each other. The architecture of the model isn't the determinant of its capabilities; it's the training data that holds the key. Conversely, if these models are trained on different datasets, their abilities will differ, reflecting the information each dataset embodies. This underscores that the efficacy of a model is less about its structure and more about the richness of the language data it's exposed to, as long as the architecture meets some basic requirements, as do Perceiver, BigBird, Linear Transformer, Performer, Reformer, Longformer, S4, and the rest of the zoo.

It's imperative not to let these models overshadow the monumental human effort behind encoding this vast knowledge. The pinnacle of AI is a reflection of our collective wisdom, documented over time, rather than any standalone machine intelligence. Recognizing language as the bedrock of intelligence propels us towards a clearer understanding of the intricate web of information that lends potency to our words. Language is the software; models are simply the processors. To truly grasp the essence of our world, we must delve beyond models and into the intricate tapestry of symbols, waiting to be interpreted.

This viewpoint reshapes our perception of AI's trajectory. It emphasizes the significance of the latent power in our symbolic representations over the specific structures that bring them to life. The intelligence of our models is intrinsically tied to the depth and breadth of the language they're nurtured with. Intelligence is a collective thing.
