Language as the Core of Intelligence: A New Perspective

- Visarga

- Nov 20, 2023

Updated: Nov 22, 2023

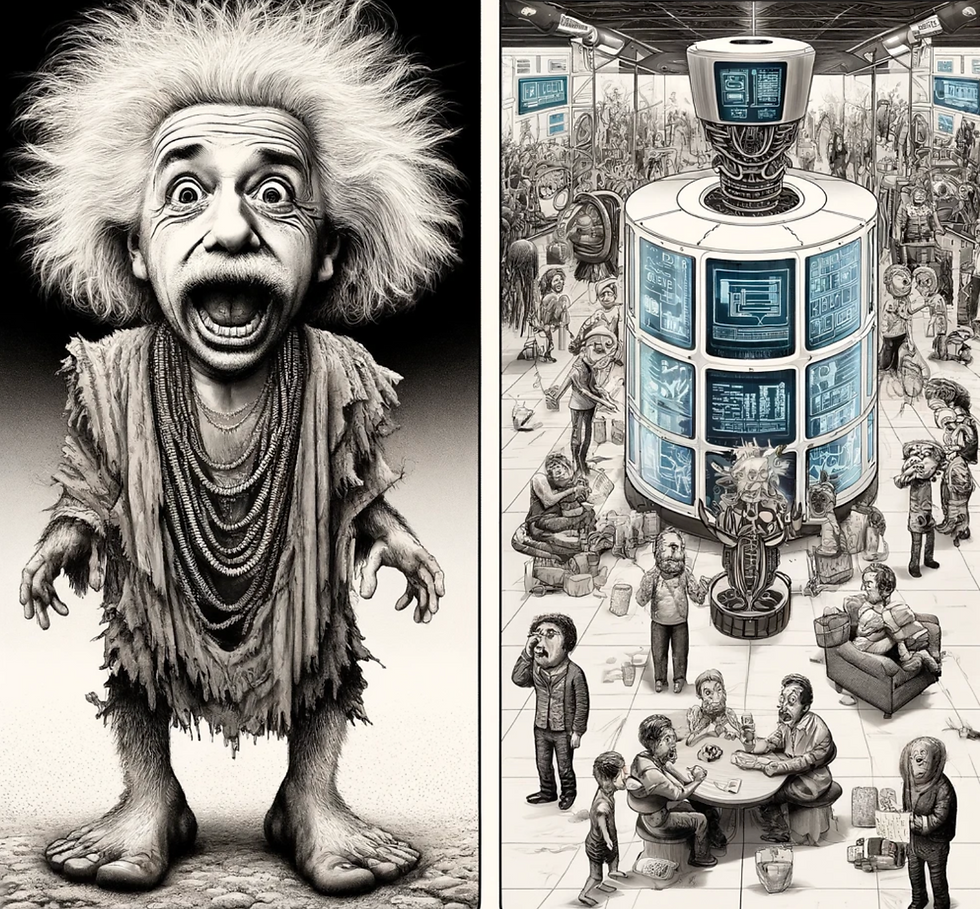

The advent of large language models (LLMs) like GPT-3 has sparked debate on the nature of intelligence. Their ability to generate remarkably human-like text after training on vast datasets suggests that much of our cognitive ability may arise simply from processing linguistic patterns. This leads to an intriguing hypothesis: could language itself be the core “operating system” of intelligence, with human and artificial minds essentially executing the knowledge and algorithms encoded within language?

I believe language forms an evolutionary knowledge system that profoundly shapes thought in both human brains and AI systems trained on text. In this view, the collective intelligence accumulated over generations and crystallized in language provides the foundational algorithms for general reasoning and problem-solving. Our biological brains and bodies mainly serve to contextualize this linguistic cognition, providing real-world experiences that condition the application of language-driven thought. But the core intelligence manifest across domains arises from the structured symbolic knowledge inherent to language itself.

This perspective sees language as far more than just a communication protocol. Rather, it is an external scaffolding that has co-evolved with the human mind in a tight interplay, gradually encoding inferential mechanisms and causal models within its structures. Language is not an instrument for representing pre-existing thought; it is the driving force in the construction of meaning and thought itself. The pragmatic frames, metaphors, and problem-solving tropes built into language provide a developmental “training environment” that shapes neural learning and cognition in the child’s brain. But language persists as an independent system carrying this distilled collective intelligence even when disembodied, as seen in LLMs.

This hypothesis recasts both human and artificial intelligence as fundamentally based in the execution of linguistic knowledge. It shifts the focus from neural computation in brains or model architectures in AI to the structures of knowledge within language itself as the main driver of generalizable reasoning abilities. Human brains are still far more adaptively complex processing systems than the most advanced AI today. But in this view, the essence of our cognition (the flexible thinking, problem-solving, planning, and inferential capacities that manifest intelligence) arises largely from learning to execute the “Language OS” that distills the hard-won knowledge of generations into symbolic constructs.

The implications are profound: both humans and AIs exhibit intelligence by internalizing and executing the logic embedded in the cultural repository of language. The key difference may lie in how each applies this linguistic cognition. Humans ground it in subjective lived experience and social contexts, enabling wise and compassionate application. Meanwhile, LLMs employ it mechanically based on their training corpora, without deeper human values or judgment. Integrating lived, embodied experience may therefore be crucial for advanced AI to contextualize and guide linguistic reasoning towards benevolent outcomes.

This perspective provides a novel lens for understanding intelligence as arising from language more than from localized computation in biological or artificial neural networks. It moves us to reflect on how to develop AI that complements rather than replicates human capacities, combining the statistical knowledge of LLMs with the grounded wisdom of human intelligence. The interplay of language, embodiment, and ethics may hold the key to creating AI able to learn from our best intuitions and put collective knowledge to work for the greater good. Understanding the sources of cognition is crucial for steering the future of intelligence.

In conclusion, the hypothesis of language as the core algorithmic engine of thought provides fertile ground for re-examining the foundations of intelligence. While further research is needed, it suggests promising directions such as focusing on the knowledge encoded in language itself, designing AI systems that integrate grounded real-world experience, and emphasizing human values to guide linguistic reasoning. As we unlock the secrets of cognition, we must remain committed to creating AI that enhances rather than replaces the most uplifting aspects of the human spirit. Our deepest hopes for the future may depend on it.
