The Promise of Machine Studying

- Visarga H

- Oct 26, 2023

Updated: Nov 5, 2023

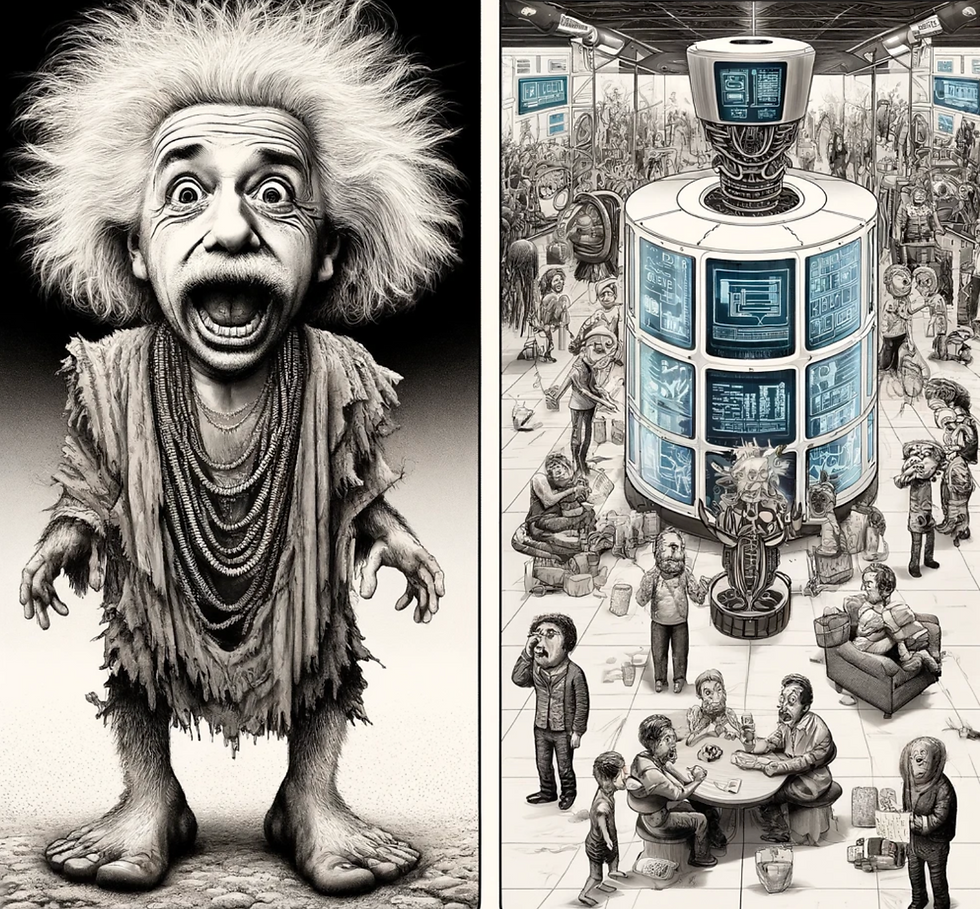

Recent advances in large language models (LLMs) like GPT-3.5 and GPT-4 have demonstrated an astonishing ability to generate coherent, human-like text. This has opened up new possibilities for using these models not just to perform tasks directly, but also to improve the training data used for other AI systems. In an approach I'm calling "machine studying", LLMs study and enhance datasets before those datasets are used to train smaller, more efficient models. This lets the smaller models benefit from the LLMs' greater contextual understanding and reasoning abilities during training.

Machine studying has already shown promising results. For example, Microsoft's Phi-1.5, a 1.3-billion-parameter model, reached performance on natural-language tasks comparable to models five times its size after being trained on 150 billion tokens, most of them synthetic, textbook-style data generated by a larger LLM. The key was that the generator could take the original training data and produce explanatory examples that connected concepts and provided chain-of-thought reasoning. This enriched data enabled the smaller Phi-1.5 to learn more broadly and deeply. Similarly, Microsoft's Orca was fine-tuned on step-by-step explanation traces and input-output pairs from GPT-3.5 (ChatGPT) and GPT-4. By learning from the larger models' reasoning, smaller models like Orca gain stronger generalization and inference abilities.

Crucially, machine studying can help overcome the "reversal curse", where models trained on a relation stated in one direction struggle to apply it in reverse. For instance, a model trained on "A is the father of B" does not necessarily infer "B is the child of A" at inference time. When the LLM writes out explanations that restate such facts from both sides, the training data contains both directions, giving the smaller model the contextual reasoning it needs for bidirectional inferences. Information becomes connected across examples rather than remaining fragmented.
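As a minimal sketch of the bidirectional idea, assuming facts are available as simple (subject, relation, object) triples, the following Python augments each fact with its reversed statement. The `Fact` type, the `INVERSE` table, and `with_reversals` are hypothetical illustrations; in actual machine studying, the LLM would be prompted to restate each fact in both directions rather than relying on a hand-written lookup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str


# Hypothetical inverse relations; an LLM would normally supply these phrasings.
INVERSE = {
    "the father of": "the child of",
    "the mother of": "the child of",
    "the teacher of": "a student of",
}


def render(fact: Fact) -> str:
    """Turn a triple into a plain training sentence."""
    return f"{fact.subject} is {fact.relation} {fact.obj}."


def with_reversals(facts: list[Fact]) -> list[str]:
    """Emit each fact and, when its inverse relation is known, the reversed fact."""
    sentences = []
    for fact in facts:
        sentences.append(render(fact))
        inverse = INVERSE.get(fact.relation)
        if inverse is not None:
            sentences.append(render(Fact(fact.obj, inverse, fact.subject)))
    return sentences


if __name__ == "__main__":
    facts = [Fact("A", "the father of", "B"), Fact("E", "a friend of", "F")]
    for sentence in with_reversals(facts):
        print(sentence)
    # A is the father of B.
    # B is the child of A.
    # E is a friend of F.
```

Relations missing from the table pass through unchanged, which is exactly the gap an LLM-based restatement is meant to close.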

There are several ways to implement machine studying effectively. LLMs can be prompted, or fine-tuned, on a training dataset to generate additional examples, explanations, and solutions. By prompting for clarity, reasoning, and diverse formulations, models like GPT-3.5 can bring out the hidden connections within the data. LLMs can also be coupled with search, symbolic reasoning, and code-execution tools to explore the training data more deeply. By simulating solutions or working through problems step by step, LLMs can develop a causal, multi-step understanding of the data.
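The prompting and verification steps can be sketched in Python as follows. This is an illustration under stated assumptions, not a reference implementation: `llm` is any callable that maps a prompt string to generated text (no particular model API is assumed), the prompt templates are hypothetical, and a small arithmetic evaluator built on the standard `ast` module stands in for a full code-execution tool, used here to discard worked solutions whose result does not match the claimed answer.

```python
import ast
import operator
from typing import Callable

# Hypothetical study prompts asking for reasoning, rephrasing, and connections.
STUDY_PROMPTS = [
    "Explain step by step why the answer to '{q}' is '{a}'.",
    "Rewrite the question '{q}' in a different form that has the same answer '{a}'.",
    "Name a related concept and explain how it connects to '{q}'.",
]


def build_study_prompts(question: str, answer: str) -> list[str]:
    """Fill every study template for one (question, answer) training pair."""
    return [t.format(q=question, a=answer) for t in STUDY_PROMPTS]


def study(dataset, llm: Callable[[str], str]) -> list[dict]:
    """Ask the LLM to study each pair and collect its outputs as new records."""
    enriched = []
    for question, answer in dataset:
        for prompt in build_study_prompts(question, answer):
            enriched.append({"source": question, "prompt": prompt, "text": llm(prompt)})
    return enriched


# Arithmetic operators the checker is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def safe_eval(expr: str) -> float:
    """Evaluate +, -, *, / arithmetic by walking the syntax tree (no eval())."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")
    return walk(ast.parse(expr, mode="eval"))


def keep_verified(solutions: list[dict]) -> list[dict]:
    """Keep generated solutions whose expression evaluates to the claimed answer."""
    kept = []
    for s in solutions:
        try:
            ok = abs(safe_eval(s["expression"]) - s["answer"]) < 1e-9
        except (ValueError, ZeroDivisionError, SyntaxError):
            ok = False
        if ok:
            kept.append(s)
    return kept


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        # Stand-in for a real model call.
        return f"<generated text for: {prompt}>"

    records = study([("What is 12 * 7?", "84")], fake_llm)
    print(len(records))  # 3 records, one per study prompt
    candidates = [
        {"expression": "12 * 7", "answer": 84},
        {"expression": "12 * 7 + 1", "answer": 84},
    ]
    print(keep_verified(candidates))  # only the first candidate survives
```

Walking the parsed syntax tree instead of calling `eval()` restricts the check to basic arithmetic; a real pipeline would need a properly sandboxed executor for general code.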

As the enhanced training sets produced by machine studying propagate through successive generations of AI systems, capabilities could compound quickly, since each iteration would have more thoroughly studied data to build upon. This could accelerate progress in areas such as common-sense reasoning, which require broad knowledge.

Of course, there are still challenges to address. Running large LLMs over entire training sets requires significant compute. Care must be taken to avoid bias and overfitting during the iterative process. There are also open research questions around how best to generate and select explanatory examples.
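How best to select generated examples remains open, but one naive baseline against overfitting to repeated synthetic text is to drop trivially short outputs and duplicates that differ only in case, punctuation, or spacing. The Python sketch below assumes examples are plain strings; `normalize`, `select_examples`, and the `min_words` threshold are hypothetical choices, and the filter does nothing to address bias.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def select_examples(examples: list[str], min_words: int = 5) -> list[str]:
    """Drop examples that are too short or that duplicate an earlier one after normalization."""
    seen = set()
    selected = []
    for ex in examples:
        norm = normalize(ex)
        if len(norm.split()) < min_words:
            continue
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        selected.append(ex)
    return selected


if __name__ == "__main__":
    generated = [
        "B is the child of A, because A is B's father.",
        "b is the child of a because a is b's father",
        "Too short.",
    ]
    print(select_examples(generated))  # keeps only the first sentence
```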

Nonetheless, machine studying stands out as a promising path to unlock more efficient and capable AI. By imbuing smaller models with the reasoning capacities of their larger cousins, previously unattainable feats could be within reach. And even larger models stand to benefit from having better training sets.
