Teaching AI to Study and Learn Like Humans

- Visarga

- Nov 5, 2023

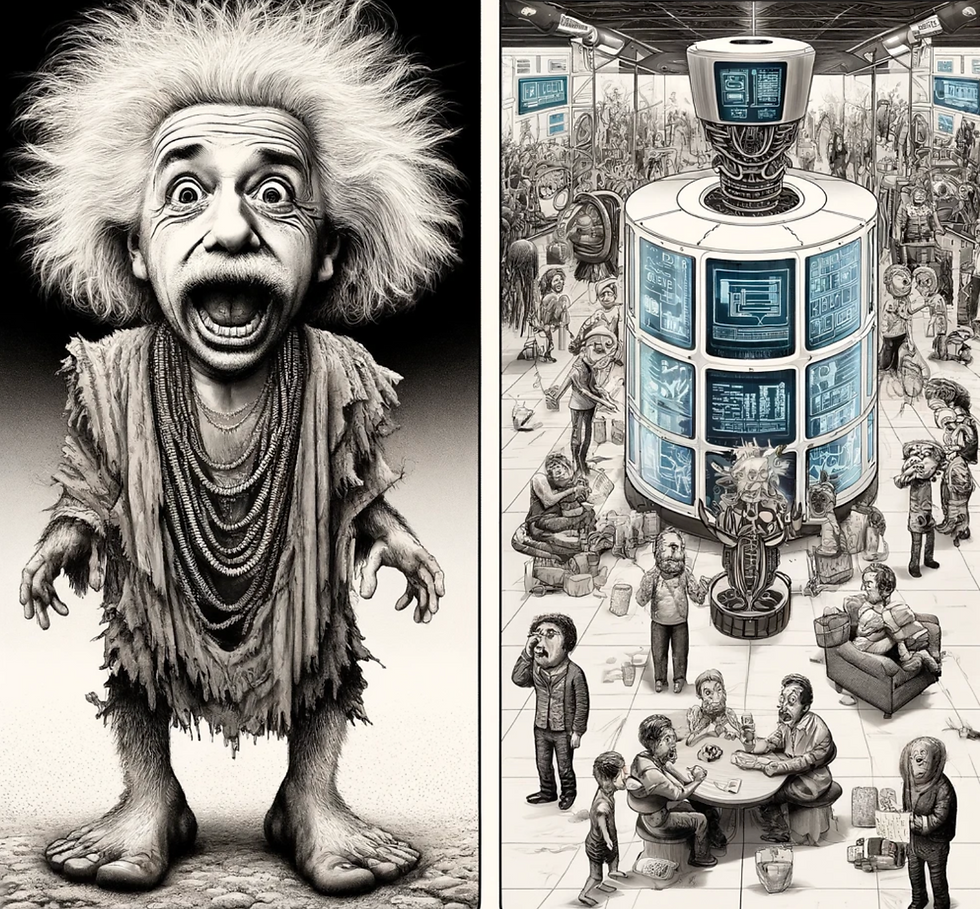

LLMs have made remarkable progress; however, these systems still struggle with key aspects of intelligence, such as drawing conceptual connections between pieces of information. This article expands the "machine studying" line of thought started here and here, and proposes a technique called Preference-Amplified Synthesis Training (PAST) that aims to address these limitations.

The key idea behind PAST is that humans don't just passively take in information – we actively study it by making inferences, filling in gaps, and refining our mental models. PAST seeks to instill this same “study” process in AI systems by having them enrich their own training data.

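During PAST training, an AI generator creates multiple candidate completions for each training example. For instance, given a short text snippet, the system might generate several longer versions that infer additional context and reasoning. A preference model then ranks these candidates, so the refined data consists of better and worse elaborations of the same source text.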
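The step above, turning one snippet into a preference pair, can be sketched as follows. This is a minimal illustration under stated assumptions, not the article's implementation: `build_preference_pair`, `toy_generate` and `toy_score` are hypothetical names, and the toy generator and scorer stand in for a real LLM sampler and a real preference model (which, as the article notes further on, replaces ongoing human involvement). Pairing the highest-scored completion against the lowest-scored one is also an assumption.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical interfaces (not from the article): a generator returns one
# sampled completion for a prompt; a scorer returns a scalar preference.
Generator = Callable[[str], str]
Scorer = Callable[[str, str], float]


def build_preference_pair(
    snippet: str,
    generate: Generator,
    score: Scorer,
    num_samples: int = 4,
) -> Tuple[str, str, str]:
    """Sample several 'study' completions for one snippet and return
    (prompt, chosen, rejected) using the highest and lowest scores."""
    if num_samples < 2:
        raise ValueError("need at least two completions to form a pair")
    completions: List[str] = [generate(snippet) for _ in range(num_samples)]
    # sorted() calls the key once per completion, ordering worst -> best.
    ranked = sorted(completions, key=lambda c: score(snippet, c))
    return snippet, ranked[-1], ranked[0]


# Toy stand-ins for illustration only: the "generator" appends a random
# amount of extra text, and the "preference model" prefers longer outputs.
_rng = random.Random(0)


def toy_generate(prompt: str) -> str:
    return prompt + " It follows that..." * _rng.randint(0, 3)


def toy_score(prompt: str, completion: str) -> float:
    return float(len(completion))


if __name__ == "__main__":
    prompt, chosen, rejected = build_preference_pair(
        "Water boils at 100 C at sea level.", toy_generate, toy_score
    )
    print("chosen:  ", chosen)
    print("rejected:", rejected)
```

With a real model, `generate` would sample from the LLM and `score` would query the preference model. Best-versus-worst is only one simple pairing rule; others, such as the best completion against a randomly chosen weaker one, are equally plausible.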

To update the model we use dDPO (distilled Direct Preference Optimization; Tunstall et al., 2023), a method for fine-tuning LLMs on preference data ranked by AI feedback rather than by human annotators. The hope is that training the main model on this refined data teaches it to make the kinds of connections and inferences humans make. I believe models trained with PAST could develop stronger reasoning abilities and a broader, more human-like understanding because, instead of optimising only the short-horizon objective of next-token prediction, PAST updates the model with gradients from a preference objective computed over the entire completion.
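dDPO applies the standard Direct Preference Optimization loss to AI-ranked pairs. The sketch below computes that loss for a single (chosen, rejected) pair in plain Python to make the "full completion" point concrete: each completion's score is the sum of its per-token log-probabilities, so every token of both completions contributes to the training signal. The function names and the `beta=0.1` default are illustrative assumptions, and a real trainer would compute these log-probs from model logits and average the loss over a batch.

```python
import math
from typing import Sequence


def sequence_logprob(token_logprobs: Sequence[float]) -> float:
    """Log-probability of a full completion: sum of its per-token log-probs."""
    return float(sum(token_logprobs))


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen: Sequence[float],
    policy_rejected: Sequence[float],
    ref_chosen: Sequence[float],
    ref_rejected: Sequence[float],
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair.

    Each argument holds per-token log-probs of a completion under either the
    model being trained (policy) or a frozen reference copy of it.
    """
    chosen_ratio = sequence_logprob(policy_chosen) - sequence_logprob(ref_chosen)
    rejected_ratio = sequence_logprob(policy_rejected) - sequence_logprob(ref_rejected)
    z = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(z)) == softplus(-z)
    return softplus(-z)


if __name__ == "__main__":
    # The policy already favours the chosen completion a bit more than the reference does.
    print(dpo_loss([-0.5, -0.5], [-3.0], [-1.0, -1.0], [-2.0]))
```

When the policy and reference agree exactly, the loss is log 2; it falls below that as the policy raises the chosen completion's likelihood relative to the rejected one, measured against the reference model.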

PAST could also help overcome known training failures such as the “reversal curse,” where a model trained on statements of the form “A is B” fails to infer “B is A.” Studying the data, rather than memorising it in one direction, could force the model to make these deeper connections.

Because the studying process is automated, models could continuously enrich their own training data without ongoing human involvement, relying instead on a preference model. The model thus learns from both the raw text and the preference model at the same time. Models tend to reason well at test time, especially with chain-of-thought prompting, but at training time they act as rote memorisers that take every available shortcut. PAST aims to bring those test-time smarts into training.

PAST does face open challenges: the computational cost of generating and scoring several completions per training example, and the question of how to synthesize the most useful training examples. Nonetheless, by amplifying human-like judgment and study skills during training, PAST represents a promising step toward more capable AI. Teaching machines to learn more like humans could unlock capabilities that current training methods do not reach.